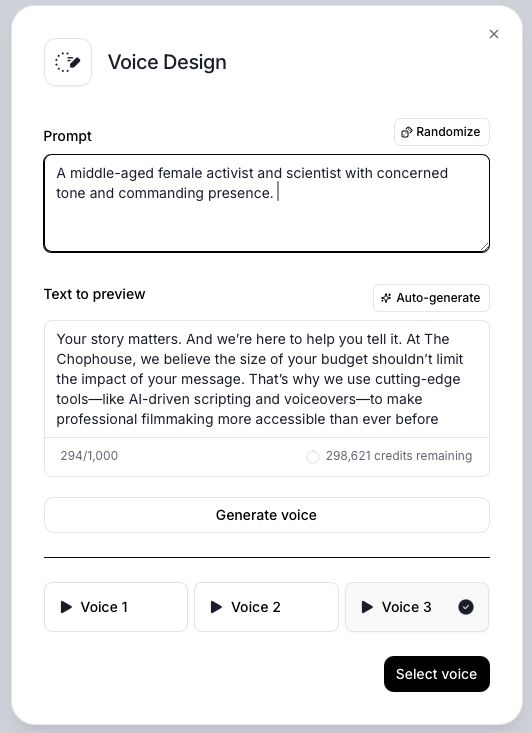

Above: The raw amplitude waveform of three different AI voices from Eleven Labs.

There’s a lot of talk about AI in the video industry. Eye-catching capabilities like World Jumping are made possible with AI-generated video using platforms like Runway and Adobe Firefly. Add an AI-generated voice from Eleven Labs, and you can transform anyone into anything or anyone else.

At The Chophouse, we’ve not had a project that needs world jumping, yet… But we’ve had a lot of success with AI-assisted scripting and voiceover using ChatGPT and Eleven Labs. Combined, these platforms create compelling content quickly, and at a remarkably reduced cost compared to hiring a professional voiceover (VO) artist and trying to write a script by yourself.

Is this the end for professional voiceover artists?… Not yet.

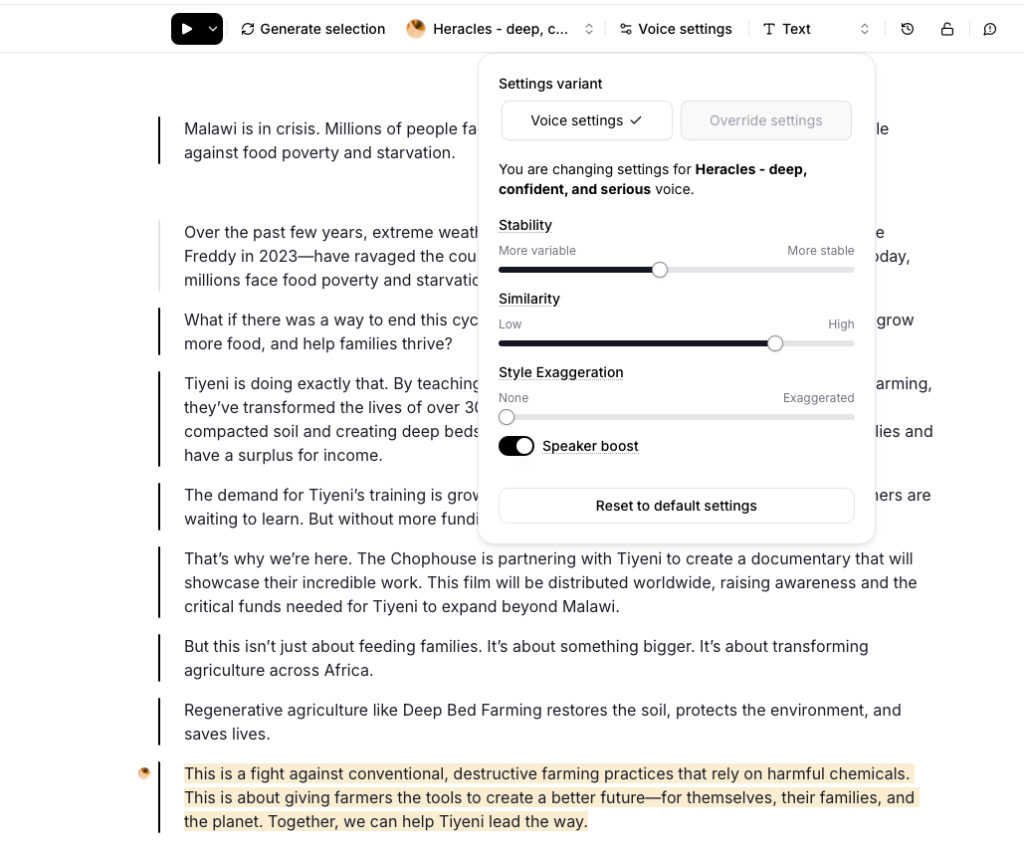

Above: The Project UI in Eleven Labs.

Pros

1. Speed

There are several key benefits of working with AI voices. The first is speed. Not just in the first generation, but in its ability to regenerate variations instantly. A human just can’t deliver take after take that quickly without it wearing on them. If I need the AI to redo a section of content, I just hit the “Generate Section” button and wait a few seconds for the new version. There are also adjustments you can make to the AI voice properties and the sentence prompt to clarify the emotion you’re looking for. This has limitations that we’ll get to later.

2. Availability and variety

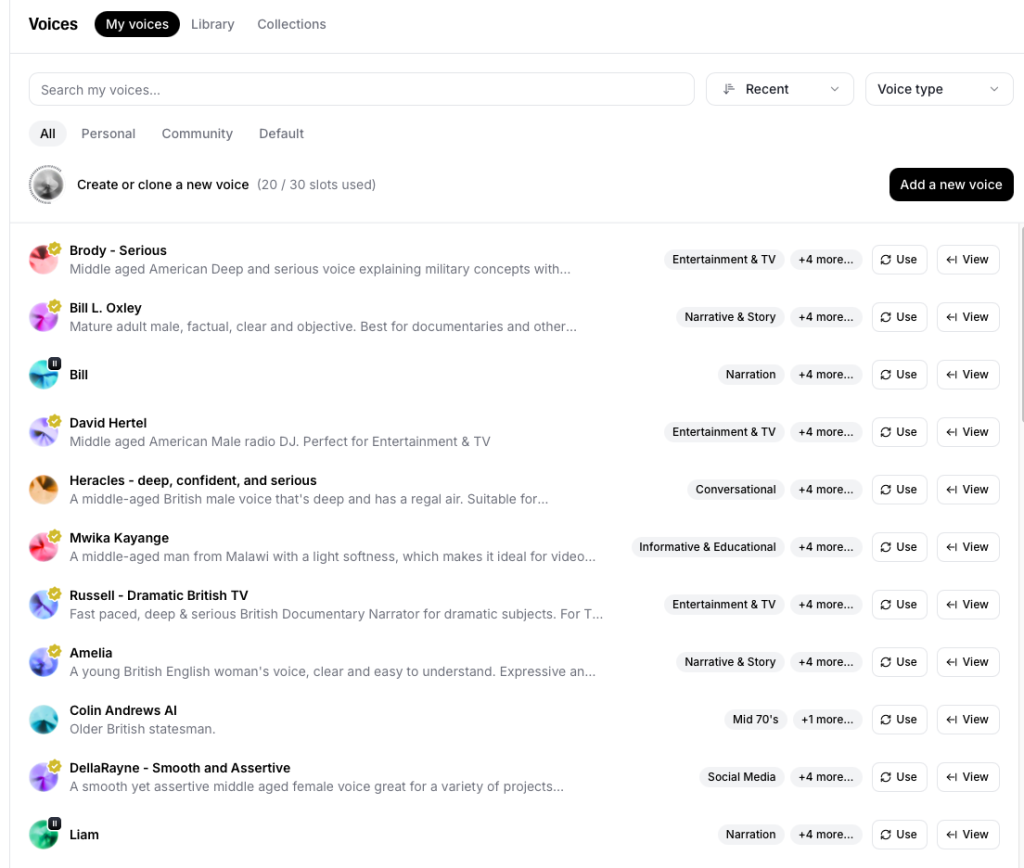

Finding the right human voice can be a time-sucking challenge. If you hit up freelancers, you’re limited to what they can do. If you go for an agency, you have more options, but are still usually limited by geography or language. With Eleven Labs’ voice library, you have a much larger pool of voices from all around the world to choose from. There’s a wide range of accents and vocal types, from narration and storytelling to an energetic morning DJ or a serious news anchor tone. It’s easy to swap between voices to see what sounds best.

Above: Some of the voices available in Eleven Labs.

3. Cost

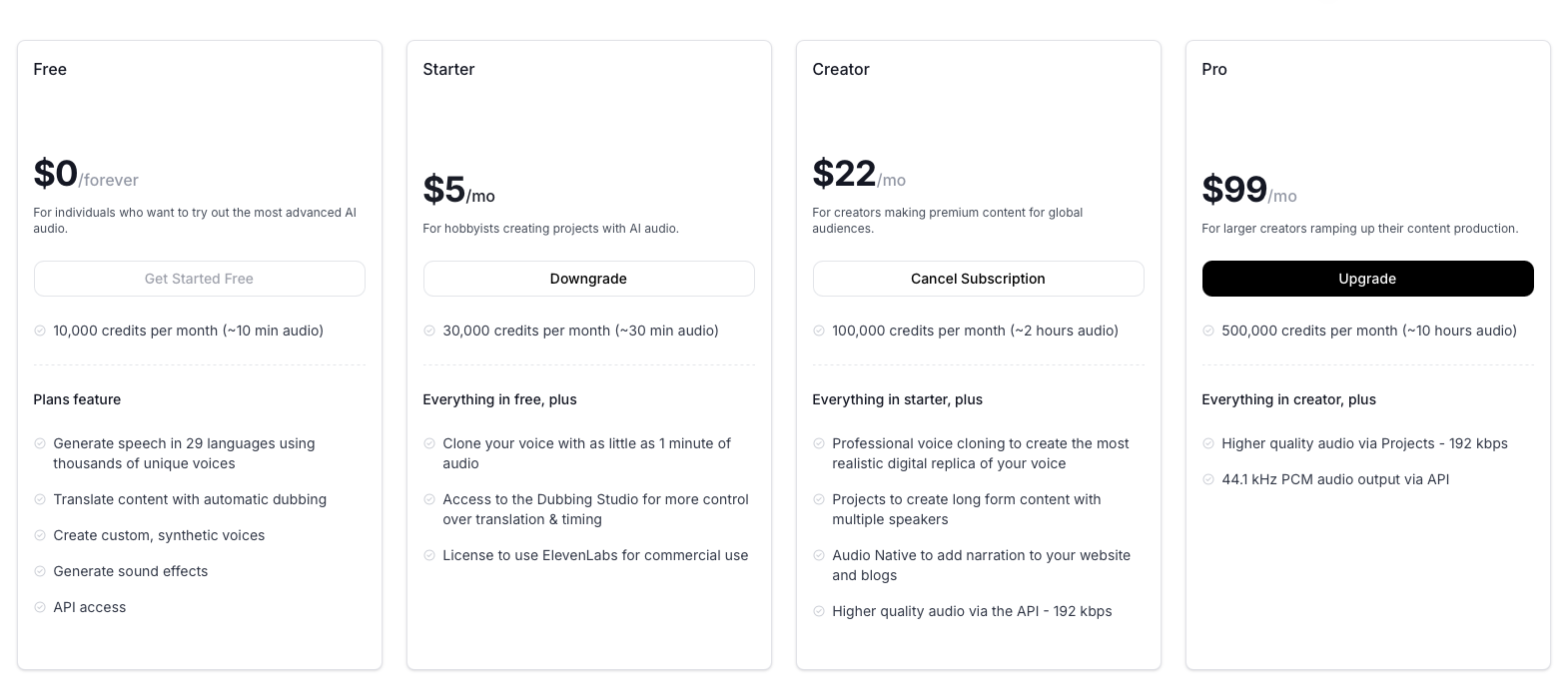

Cost is the most important difference between using AI VO and a real human. Eleven Labs’ Creator plan is $22 / month. No human voice artist can work for $22 / month and provide endless voiceovers. With the AI, you’re limited only by how much content you can supply and the number of credits you have. For production crews like The Chophouse that work mostly with NGOs and educators, cost is a huge factor. Before Eleven Labs, we would often do our own VOs to save the client some money, which limited the client to whatever we were able to do. Eleven Labs has opened the door to a world of VO options at a cost that beats any human freelancer or agency.

That wraps the pros for Eleven Labs: Speed, Availability, Variety, and Cost. Now let’s get into the cons. Yes… Eleven Labs is a huge leap forward in AI-assisted video production. However, it still needs some work to be a pro-level human VO replacement.

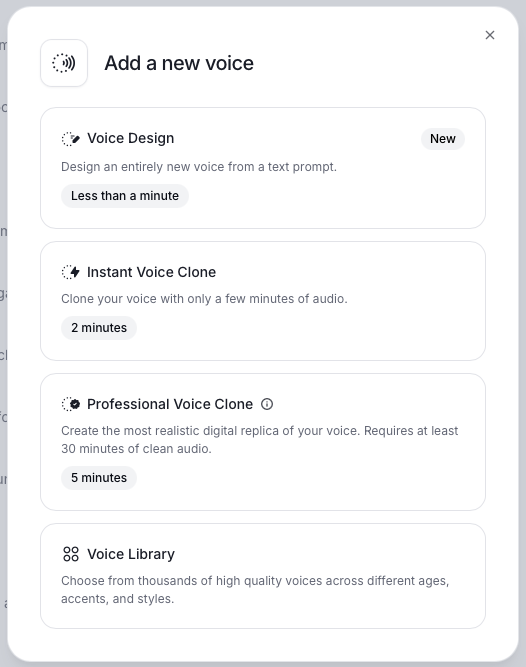

Above: The voice cloning options in Eleven Labs.

Cons

1. Emotion and inflection

The biggest issue Eleven Labs has at the moment is limited control over emotion and inflection. There are some settings you can tweak to try and get more emotion. You can use grammar, exclamation points, question marks… But they often do not result in the right inflection. You can also write content like you would see it in a novel, adding the emotion at the end.

“I will do what I want!” he said in anger. That works some of the time to get the emotion you want. But you have to edit out the audio at the end because the AI will say it all, as if reading a book out loud. Listen to the example below.

Emotion example

This is one place where a real human outperforms Eleven Labs. A professional VO artist would know how to read the line right and could give you several variations of that line to choose from, with an array of emotions and inflections. The AI doesn’t generate vocal nuance or emotion with much variation, which can result in an inappropriate or inconsistent tone. It’s not reading the script like a human would, recognizing the emotions and tone of the complete scene. It’s also limited by the emotional range and quality of the original voice recording that the AI is modeling. That’s the next issue with the platform.

2. Voice recording quality

Eleven Labs has a large selection of professionally recorded voices to choose from. It also has a number of voices submitted by freelance VO artists. These real voice samples are used by the AI to build your voice model. The professional recordings are all good quality, but each is limited to one emotional style. You can choose an energetic happy voice or a serious low-key voice. A frantic DJ, or a mellow grandma to read bedtime stories. But the emotions are limited to that one state. Many of the freelance voices are low quality, with room reverb or hiss. Many are overcompressed, as if recorded and uploaded on a phone. They sound thin and lack EQ. The emotion may be right, but the voice quality is insufficient.

Another issue with the recording is breath. You’ll hear it in the generated audio because the person in the original recording left it in. This stuff has to be cleaned up unless you’re looking for that effect.

Above: The voice by prompt UI in Eleven Labs

Listen to the example below. This is a new feature that lets you design a voice by prompt. You get three options. You can hear the quality and volume levels vary between voices. I left the audio raw to highlight these differences.

Voice by prompt sample

You can also upload your own voice samples to work with. This is a really handy option. There are two levels of voice cloning: an “Instant Voice Clone” that requires 5 minutes or less of recorded voice, and a pro version that requires 30 minutes of high-quality audio to completely clone a voice. We haven’t tried the pro clone yet. I did an experiment with the instant clone on my “mellow DJ” voice. That voice is very subtle, and the AI was unable to do much with it emotionally. The more expressive the samples, the more expressive the AI can be.

3. Export options

The audio quality on export is limited to 128 kbps for most plans, which is fine for social media, but not ideal for films or anything that requires a higher quality recording. The Chophouse pays for the Creator plan. This plan includes 192 kbps audio, but only via the API. I doubt most people know how to do that, and it’s not worth it for 192 kbps over the standard 128 kbps. At the $99 / month plan level, you can get 44.1 kHz PCM audio via the API. I imagine larger production houses would benefit from this plan. Most small shops wouldn’t bother. I would love to see a 256 kbps or 320 kbps option via export.

Above: December 2024 first tier price plans for Eleven Labs.
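For anyone curious what “only via the API” means in practice for the 192 kbps option, here is a minimal Python sketch, not our production workflow. It assumes the public text-to-speech endpoint takes an `output_format` query parameter (for example `mp3_44100_192`, or `pcm_44100` on higher plans) and an `xi-api-key` header, which is my reading of the Eleven Labs API docs; check the current documentation before relying on it. The function names, the `model_id` value, and the placeholder voice ID are illustrative assumptions.

```python
import json
import urllib.parse
import urllib.request

# Assumed endpoint, based on the public Eleven Labs API docs; verify before use.
API_BASE = "https://api.elevenlabs.io/v1/text-to-speech"


def build_tts_request(api_key, voice_id, text, output_format="mp3_44100_192"):
    """Build (but do not send) a text-to-speech request for a given output format."""
    query = urllib.parse.urlencode({"output_format": output_format})
    url = f"{API_BASE}/{urllib.parse.quote(voice_id)}?{query}"
    # model_id is an assumption; use whichever model your account offers.
    body = json.dumps({"text": text, "model_id": "eleven_multilingual_v2"}).encode("utf-8")
    headers = {"xi-api-key": api_key, "Content-Type": "application/json"}
    return urllib.request.Request(url, data=body, headers=headers, method="POST")


def save_speech(request, out_path):
    """Send the request and write the returned audio bytes to a file."""
    with urllib.request.urlopen(request) as response, open(out_path, "wb") as f:
        f.write(response.read())


# Example usage (needs a real API key and voice ID, and uses credits):
# req = build_tts_request("YOUR_API_KEY", "YOUR_VOICE_ID", "I will do what I want!")
# save_speech(req, "line_192k.mp3")
```

Building the request separately from sending it lets you check the URL and output format before spending any credits.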

Hybrid is best… for now

You could argue that what Eleven Labs is doing right now is a “hybrid” approach to voice modeling, because all of their voices are built from real human samples. To get the best VO possible, working with a human voiceover artist or the real voice you want to model is the way to go. The built-in voices are great for social media and even an online trailer. For more control and long-term projects involving festivals or network broadcast, having a human voice of your own to model gives you the best of both worlds.

At The Chophouse, we’ve used this hybrid approach for a number of projects. The process looks like this:

- Create an instant clone of our human voice using five short samples.

- Develop a script and test the clone.

- Send portions of the script to our human voice to read with the correct emotion and inflection.

- Upload the new sample to the AI model.

- Repeat as needed.

By giving the human voice only small portions of the script to read and letting the AI do the rest, we cut costs and speed up the process. This is especially true if you’re cloning a speaker who’s not used to doing voice work. Mistakes eat up time, and shorter reads leave less room for them.

Another example is replacing portions of an out-of-date VO by using the existing audio to create a clone, then scripting the new copy. In the example above, we cloned the voice of Tiyeni Chair Colin Andrews and used it to change a few lines he says in the video. His voice sounds the same, but the room tone and EQ aren’t perfectly matched, so it sounds like he’s in another room. With music and B-roll, it isn’t an issue. We could have asked Colin to redo the script, but it would have taken longer and his time is better spent elsewhere.

Hiring the right voice and cloning it is a great hybrid approach that offers the best solution right now. If you’re a small shop like The Chophouse, you often do your own voice work. Creating a pro level clone of your voice with a range of emotions and inflections gives you a fast and efficient way to crank out voice work for projects.

Even without the human voice on hand, you can get some great results from Eleven Labs. In the trailer for Tiyeni below, the voice is one of Eleven Labs’ AI options. It’s pretty amazing that we found a native Malawian voice speaking English with decent audio quality in Eleven Labs’ voice library. That says a lot about the global reach of this service. The AI voice sounds very realistic because Malawian inflection and emotion are much more subtle and quiet than Western voices.

There are several newer features in Eleven Labs that I haven’t messed with yet. Things like Audio Native, a code embed that will read your web pages as an audiobook, and conversational tools like generating a podcast based on a website or video content. We’ll explore those in another post.

It’s not perfect… but for video production on a budget, Eleven Labs is a game changer. The hybrid approach offers the best way to cut costs, improve production efficiency, and still work with a human voice to deliver the best results. Professional voice artists should create a pro clone of their voices and start marketing their services differently. There is still a need for high-quality voice work with proper emotion and inflection. AI hasn’t killed the VO industry yet… It’s just made voice work a lot more affordable, easier, and faster. A worthwhile expense for any production house.